Microsoft Azure SQL Data Warehouse is a cloud-based, scalable database capable of analyzing huge volumes of data, both relational and non-relational. Amazon Redshift offers comparable benefits: its ease of use and scalability are major advantages, and its performance is on par with high-end databases.

One notable feature of Amazon Redshift is its columnar storage. Rather than storing each record as a single block of data, it stores the values of each column separately. Query performance can therefore improve considerably when a query selects only a few columns instead of the whole record. The database engine is based on PostgreSQL, but it is optimized for high-performance processing and analysis of large datasets. Amazon Redshift is a relational database management system and provides the same functionality as a typical RDBMS, including OLTP-style operations.

A cluster contains one or more databases. Each compute node has its own CPU, memory, and storage. A compute node is divided into slices, and each slice is allocated a portion of the node's memory and storage, where it processes a portion of the workload assigned to the node. The leader node manages the distribution of data and workload to the slices, which then work in parallel to complete the task. The minimum storage capacity per node is 160 GB, and this can scale up to 16 TB to hold large volumes of data. Scaling is straightforward: upgrade the compute nodes or add new ones.
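To make the benefit of column-oriented storage concrete, here is a minimal Python sketch. It is a toy model only, not how Redshift is implemented, and the table, column names, and helper functions are all hypothetical. It counts how many stored values a query touches when the same data is laid out by row versus by column.

```python
# Toy comparison of row-oriented and column-oriented layouts.
# Assumption: the table and all names below are made up for illustration.

rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0, "note": "gift wrap"},
    {"order_id": 2, "region": "US", "amount": 80.0, "note": "express"},
    {"order_id": 3, "region": "EU", "amount": 45.5, "note": ""},
]


def to_columns(records):
    """Pivot a list of row dicts into one list of values per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


def values_read_row_store(records):
    """A row layout reads every field of every row, wanted or not."""
    return sum(len(record) for record in records)


def values_read_column_store(columns, wanted):
    """A column layout reads only the columns the query names."""
    return sum(len(columns[name]) for name in wanted)


columns = to_columns(rows)
wanted = ["region", "amount"]

# Rough equivalent of: SELECT region, SUM(amount) FROM orders GROUP BY region
totals = {}
for region, amount in zip(columns["region"], columns["amount"]):
    totals[region] = totals.get(region, 0.0) + amount

print(totals)
print(values_read_row_store(rows), values_read_column_store(columns, wanted))
```

In this sketch the query needs two of the four columns, so the column layout touches half as many values as the row layout. On wide tables with many columns the gap grows accordingly.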

Amazon Redshift data warehousing code

The core infrastructure component of an Amazon Redshift data warehouse is a cluster, made up of a leader node and one or more compute nodes. Data is stored and processed on the compute nodes. The leader node accepts connections from client applications and dispatches work to the compute nodes. It parses each query and builds an execution plan to carry out the database operations. Based on that plan, it compiles code, distributes the compiled code to the compute nodes, and assigns a portion of the data to each node. The leader node distributes SQL statements to the compute nodes only when a query references tables stored on them; all other queries run exclusively on the leader node. The compute nodes run the compiled code and send their intermediate results back to the leader node for final aggregation.
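The division of labor described above, where a coordinating node (the leader node in AWS terminology) hands out data and the compute side returns partial results, can be sketched as a small scatter-gather program. This is an illustrative model under stated assumptions, not Redshift's actual implementation: the slice count, the CRC32 hash, and every function name are hypothetical.

```python
# Toy scatter-gather model of a coordinating node and compute slices.
# Assumption: all names and the hashing scheme are made up for illustration.
import zlib
from collections import defaultdict

NUM_SLICES = 4  # assumed, e.g. 2 compute nodes with 2 slices each


def slice_for(key, num_slices=NUM_SLICES):
    """Pick a slice by hashing the distribution key (deterministic CRC32)."""
    return zlib.crc32(str(key).encode("utf-8")) % num_slices


def distribute(rows, key):
    """Coordinator step: assign each row to exactly one slice."""
    slices = defaultdict(list)
    for row in rows:
        slices[slice_for(row[key])].append(row)
    return slices


def partial_sum(slice_rows, group_col, value_col):
    """Compute step: each slice aggregates only the rows it holds."""
    out = defaultdict(float)
    for row in slice_rows:
        out[row[group_col]] += row[value_col]
    return dict(out)


def merge(partials):
    """Coordinator step: combine partial results into the final answer."""
    final = defaultdict(float)
    for partial in partials:
        for group, value in partial.items():
            final[group] += value
    return dict(final)


sales = [
    {"customer_id": 101, "region": "EU", "amount": 120.0},
    {"customer_id": 102, "region": "US", "amount": 80.0},
    {"customer_id": 103, "region": "EU", "amount": 45.5},
    {"customer_id": 104, "region": "US", "amount": 10.0},
    {"customer_id": 105, "region": "EU", "amount": 4.5},
]

slices = distribute(sales, key="customer_id")
partials = [partial_sum(rows, "region", "amount") for rows in slices.values()]
result = merge(partials)
print(result)
```

The slices run sequentially here to keep the sketch short. In a real MPP system they would work in parallel, which is where the speed-up comes from.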

Amazon Redshift data warehousing software

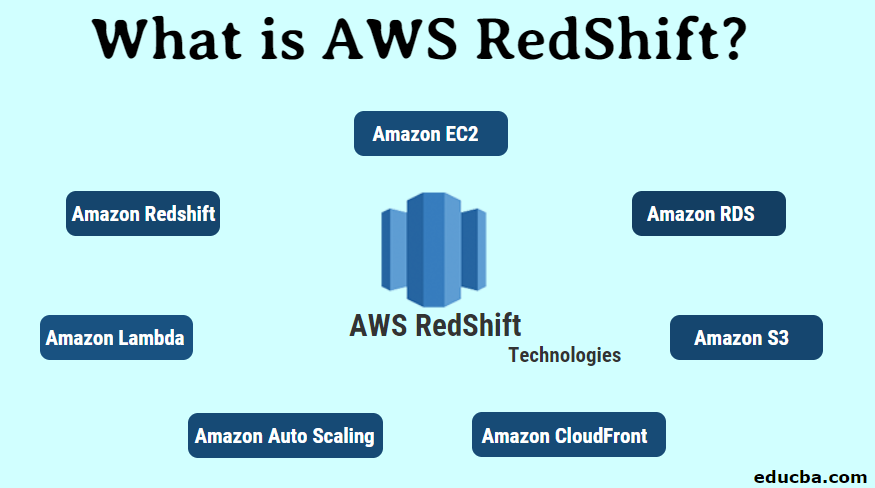

Amazon Redshift's engine combines SQL, massively parallel processing (MPP), and data processing software to speed up analytics. Amazon Redshift is a fast, fully managed, petabyte-scale data warehouse that makes it simple and cost-effective to analyze all of your data using existing business intelligence tools. Amazon Web Services (AWS) is often cited as a leading cloud data warehouse provider.

Storing data in the cloud is one of the most cost-effective ways for firms to take advantage of modern technology, because it avoids much of the cost of purchasing, installing, and configuring the necessary hardware, software, and infrastructure. A data warehouse hosted in the cloud is a data hub that can be accessed over the internet from anywhere in the world, offered as a database as a service (DBaaS). One of the key challenges of migrating to the cloud is the time it takes to run the on-premises and cloud data warehouse systems side by side, since it is good practice to move workloads one at a time. To address this, a data virtualization layer can be used to ease the migration and let the two data warehouse systems coexist while the move to the cloud proceeds.

Cloud data warehousing arose from the convergence of three key trends: growth in the variety, volume, and complexity of data sources; the need for access to data and analytics; and improvements in the technology used to access, process, and store data.

The conventional data warehouse was not built to handle the volume, variety, and complexity of today's data. Analysts must wait a long time, sometimes days, for data to flow into the data warehouse before they can access and analyze it. More often than not, the storage and compute resources needed to analyze the data are inadequate, which results in systems that hang or crash. For many firms handling large and varied data sets, the traditional data warehouse architecture is too rigid and complex to respond with the agility businesses now require. To make sound decisions, gain insights, and achieve a competitive edge, companies must interpret their data correctly and in good time.

Firms today hold more data, drawn from more diverse sources, than ever before. These sources range from cloud-based applications to enterprise data marts.
